Outlying observations and leverage points

Jan Vávra

Exercise 12

Download this R markdown as: R, Rmd.

Outline of this lab session:

- Outlying observations

- Leverage points

- Cook’s distance

In a standard linear regression model we postulate a specific parametric form for the conditional mean of the response variable \(Y\) given a vector some explanatory variables \(\boldsymbol{X} \in \mathbb{R}^p\), for instance \[ Y | \boldsymbol{X} \sim (\boldsymbol{X}^\top\boldsymbol{\beta}, \sigma^2), \] where \(\boldsymbol{\beta} \in \mathbb{R}^p\) is the unknown parameter vector and \(\sigma^2 > 0\) is (usually) the unknown variance parameter. The normality assumption is not strictly needed.

In practical applications it can happen that some specific observation \(i \in \{1, \dots, n\}\) does not align well with the proposed model. This mis-alignment can be measured with respect to both axes

- the \(y\) axis representing the dependent variable \(Y\) - an outlying observation,

- the \(x\) axis representing the explanatory variable(s) - a leverage point.

Both may (or may not) have a crucial impact on the final model estimate and the corresponding inference.

Loading the data and libraries

library("mffSM") # plotLM()

library("MASS") # stdres()1. Outlying observations

For illustration purposes we will again consider a small dataset

mtcars with 32 independent observations. Firstly, we will

focus on outlying observations and their effect on the final fit (the

estimated parameters and model diagnostics).

Firstly, consider the following model:

summary(m1 <- lm(mpg ~ disp , data = mtcars))##

## Call:

## lm(formula = mpg ~ disp, data = mtcars)

##

## Residuals:

## Min 1Q Median 3Q Max

## -4.892 -2.202 -0.963 1.627 7.231

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 29.59985 1.22972 24.07 < 2e-16 ***

## disp -0.04122 0.00471 -8.75 9.4e-10 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 3.25 on 30 degrees of freedom

## Multiple R-squared: 0.718, Adjusted R-squared: 0.709

## F-statistic: 76.5 on 1 and 30 DF, p-value: 9.38e-10par(mar = c(4,4,2.5,0.5))

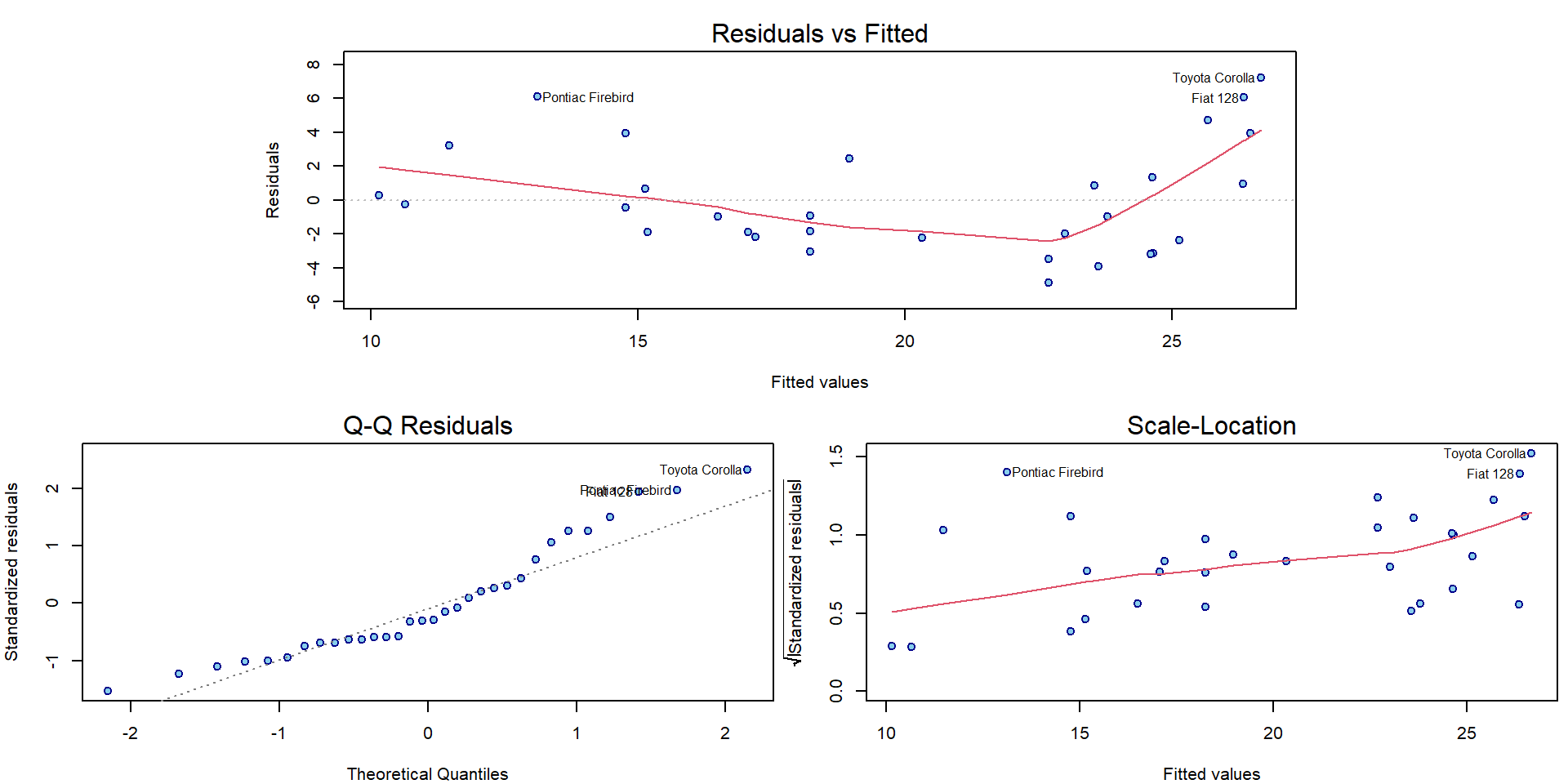

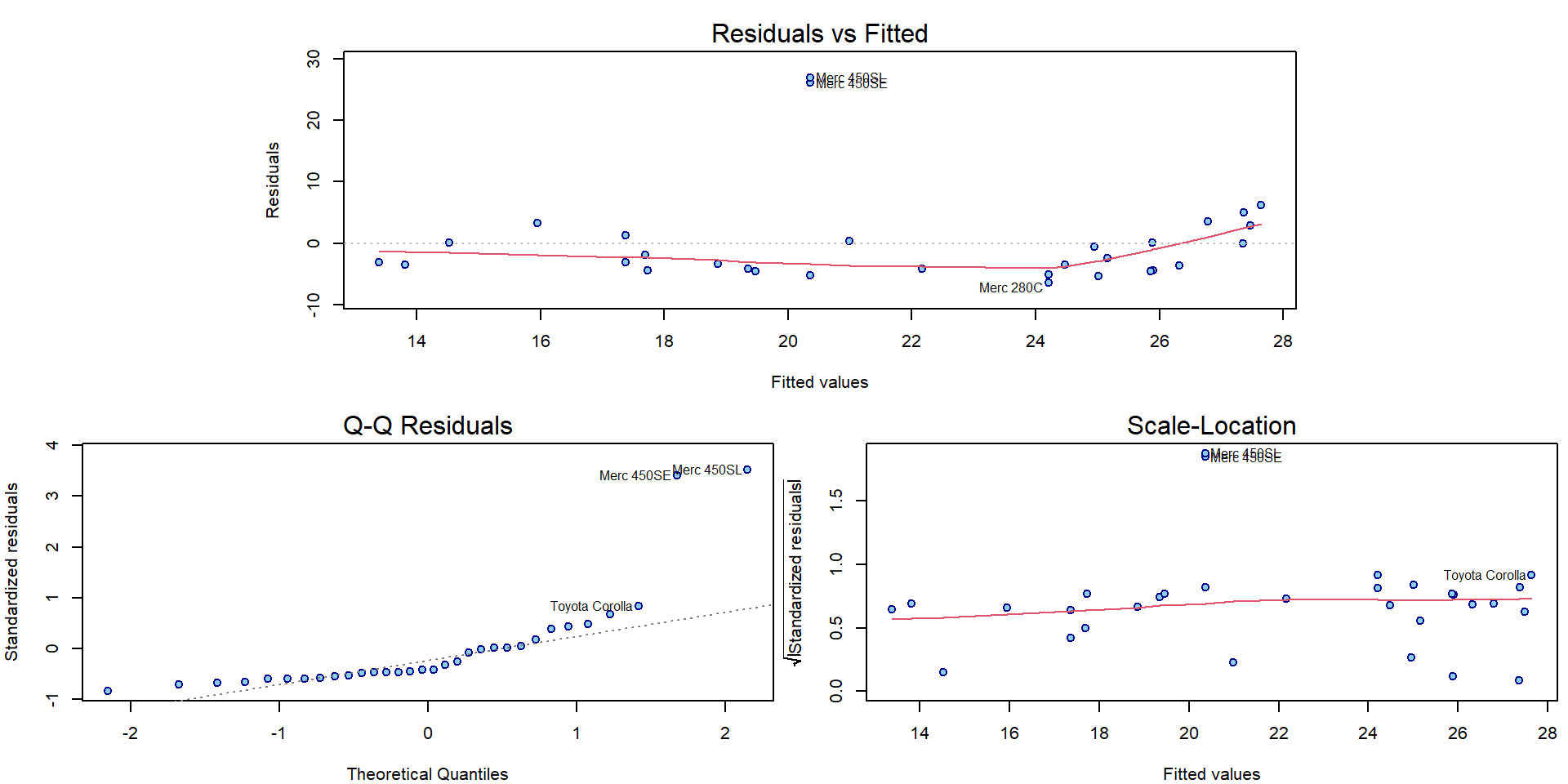

plotLM(m1)

In the following, we will introduce one outlying observation and we will investigate the effect of this outlier on the overall model.

mtcars_out1 <- mtcars

i1 <- 12

mtcars_out1$mpg[i1] <- mtcars$mpg[i1] + 30 # artificially created outlier

summary(m2 <- lm(mpg ~ disp , data = mtcars_out1))##

## Call:

## lm(formula = mpg ~ disp, data = mtcars_out1)

##

## Residuals:

## Min 1Q Median 3Q Max

## -5.65 -3.15 -1.80 1.49 27.10

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 29.88211 2.26422 13.20 5e-14 ***

## disp -0.03838 0.00868 -4.42 0.00012 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 5.99 on 30 degrees of freedom

## Multiple R-squared: 0.395, Adjusted R-squared: 0.375

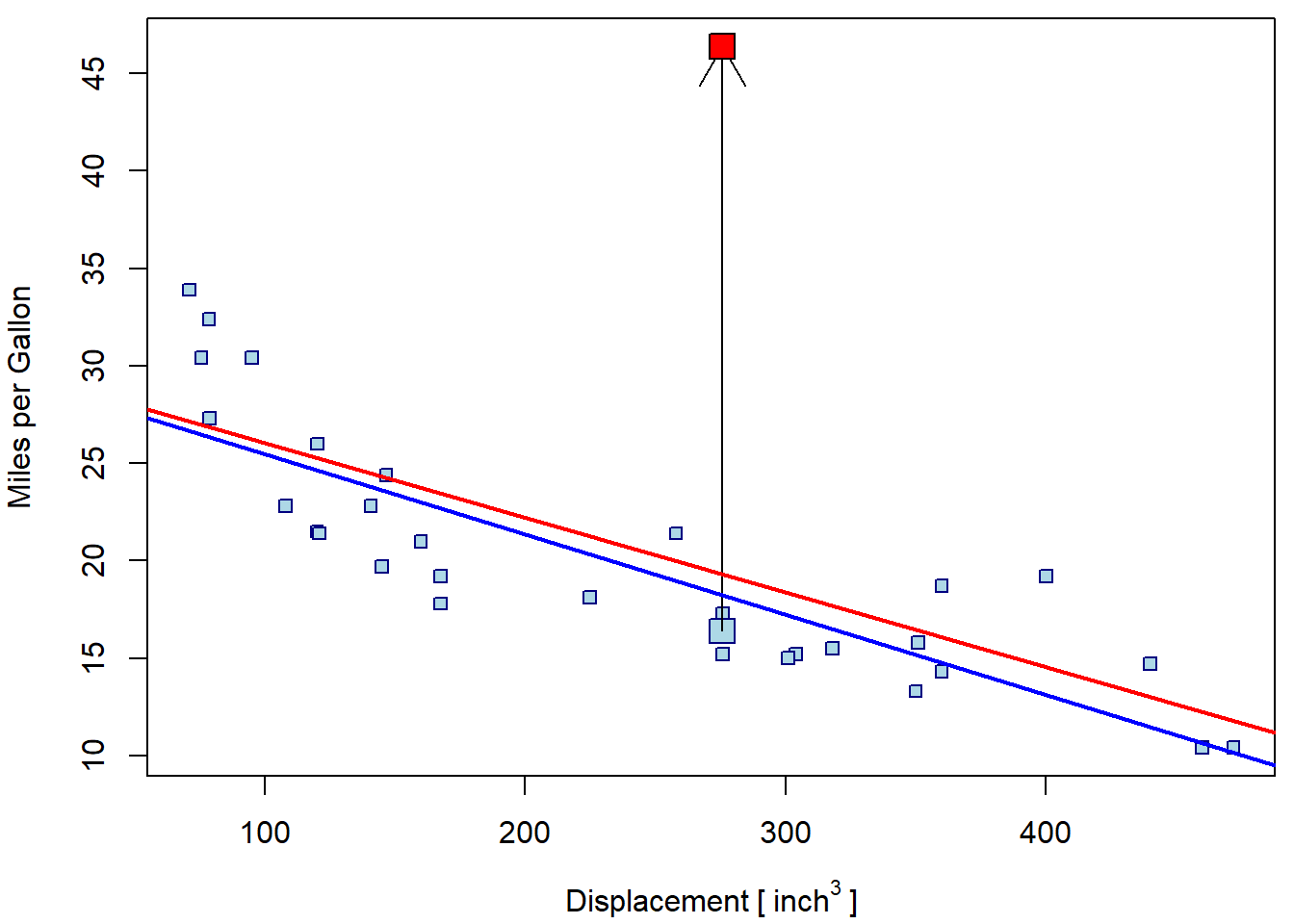

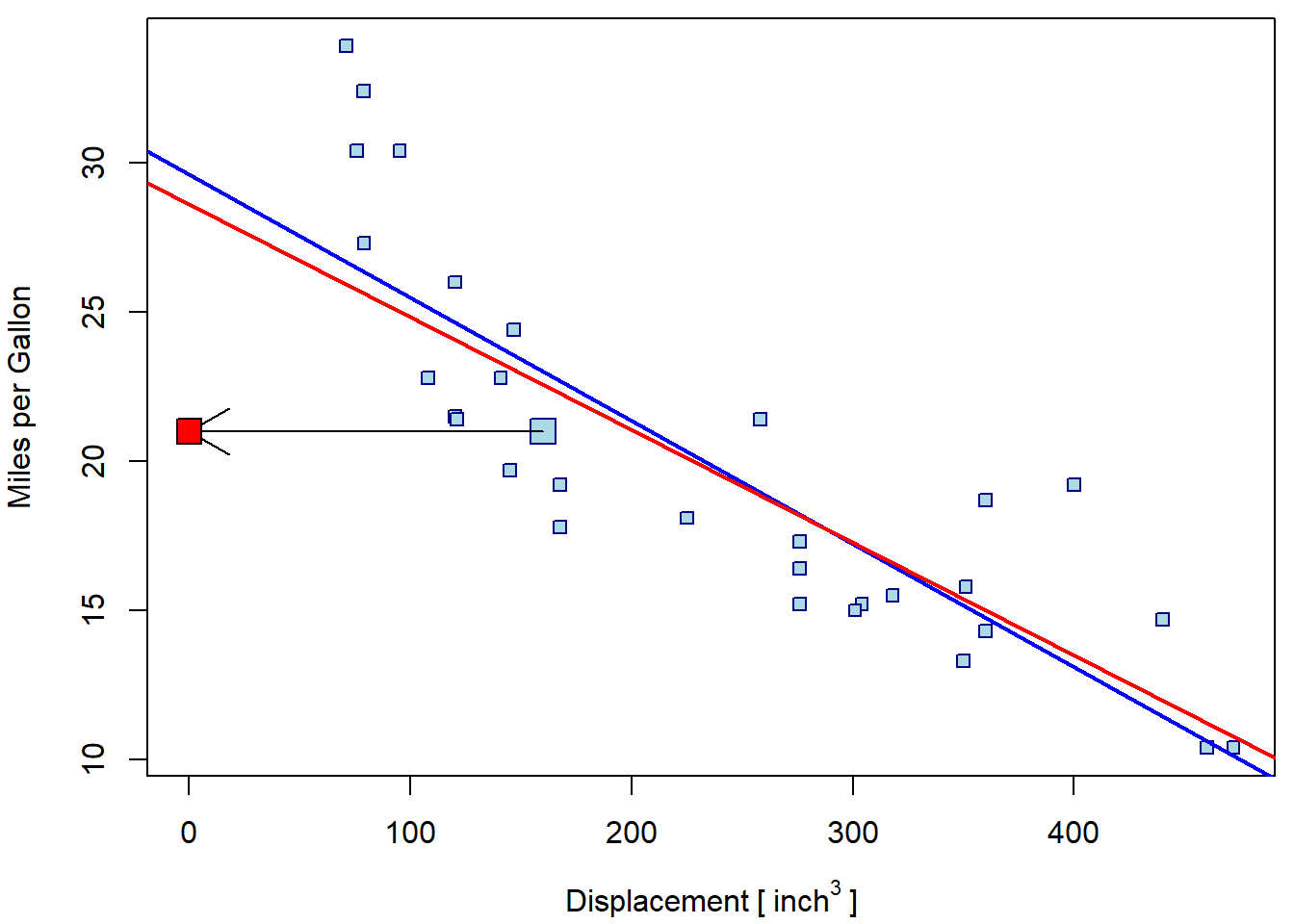

## F-statistic: 19.6 on 1 and 30 DF, p-value: 0.000118Both model can be compared visually:

par(mfrow = c(1,1), mar = c(4,4,0.5,0.5))

plot(mpg ~ disp, data = mtcars, ylim = range(mtcars_out1$mpg),

xlab = expression(Displacement~"["~inch^3~"]"), ylab = "Miles per Gallon",

pch = 22, bg = "lightblue", col = "navyblue")

points(mtcars$mpg[i1] ~ mtcars$disp[i1], pch = 22, bg = "lightblue", col = "navyblue", cex = 2)

arrows(x0 = mtcars$disp[i1], y0 = mtcars$mpg[i1], y1 = mtcars_out1$mpg[i1])

points(mtcars_out1$mpg[i1] ~ mtcars_out1$disp[i1], pch = 22, bg = "red", cex = 2)

abline(m1, col = "blue", lwd = 2)

abline(m2, col = "red", lwd = 2)

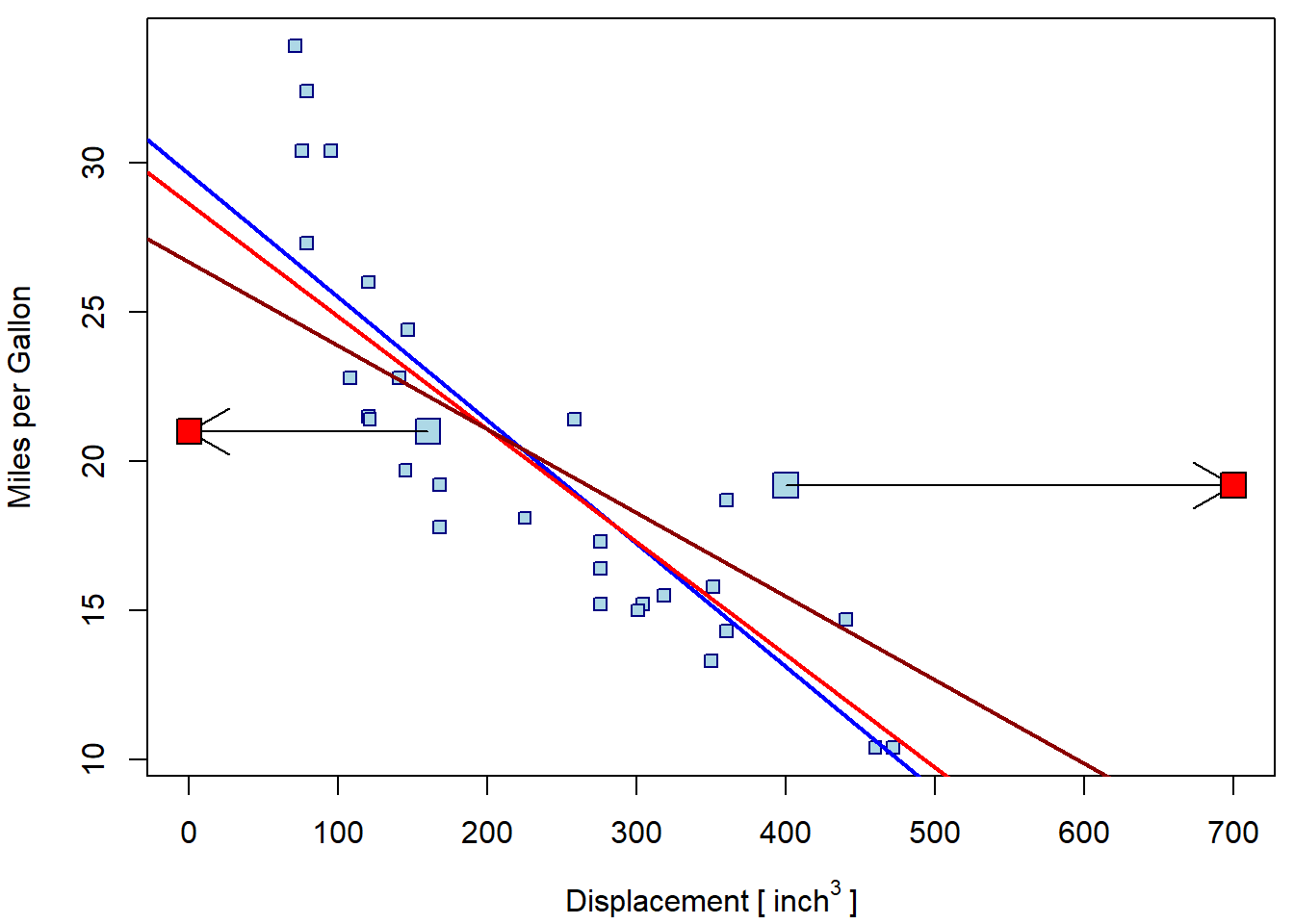

The effect of the second (artificial) outlier can be even more serious

mtcars_out2 <- mtcars_out1

i2 <- 13

mtcars_out2$mpg[i2] <- mtcars$mpg[i2] + 30

summary(m3 <- lm(mpg ~ disp , data = mtcars_out2))##

## Call:

## lm(formula = mpg ~ disp, data = mtcars_out2)

##

## Residuals:

## Min 1Q Median 3Q Max

## -6.409 -4.220 -3.032 0.635 26.936

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 30.1644 2.9485 10.23 2.7e-11 ***

## disp -0.0355 0.0113 -3.15 0.0037 **

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 7.8 on 30 degrees of freedom

## Multiple R-squared: 0.248, Adjusted R-squared: 0.223

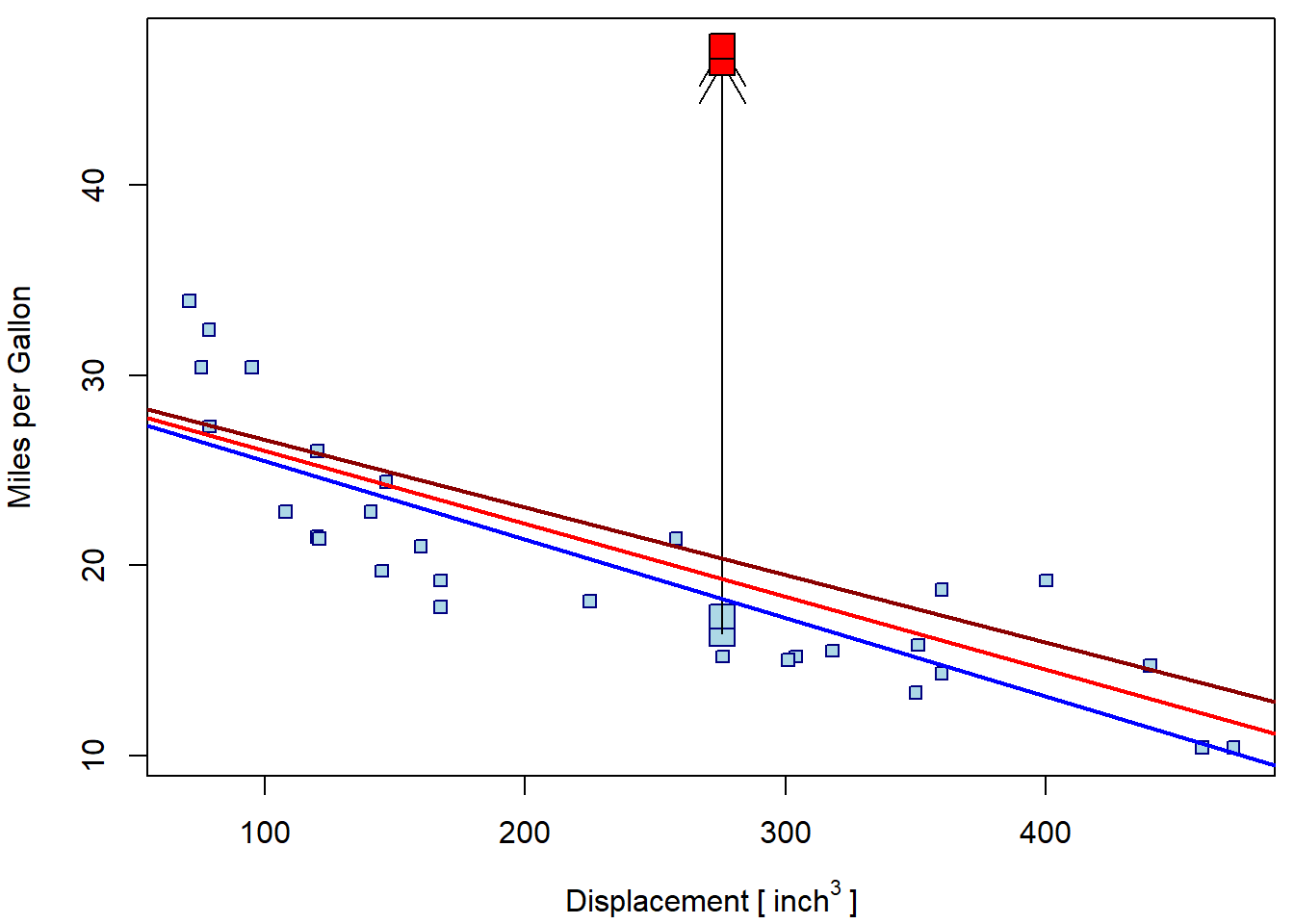

## F-statistic: 9.89 on 1 and 30 DF, p-value: 0.00373Graphically:

is <- c(i1, i2)

par(mfrow = c(1,1), mar = c(4,4,0.5,0.5))

plot(mpg ~ disp, data = mtcars, ylim = range(mtcars_out2$mpg),

xlab = expression(Displacement~"["~inch^3~"]"), ylab = "Miles per Gallon",

pch = 22, bg = "lightblue", col = "navyblue")

points(mtcars$mpg[is] ~ mtcars$disp[is], pch = 22, bg = "lightblue", col = "navyblue", cex = 2)

arrows(x0 = mtcars$disp[is], y0 = mtcars$mpg[is], y1 = mtcars_out2$mpg[is])

points(mtcars_out2$mpg[is] ~ mtcars_out2$disp[is], pch = 22, bg = "red", cex = 2)

abline(m1, col = "blue", lwd = 2)

abline(m2, col = "red", lwd = 2)

abline(m3, col = "red4", lwd = 2)

Individual work

Consider the effect of just one outlying observation and try how much the original regression line can be changed by applying the effect of just one outlying observation.

Consider the effect of two outlying observations located in a way that both will compensate the effect of the other one.

Note, that the outlying observations artificially introduced in the datasets above are truly typical with respect to the displacement information (i.e., the \(x\) axis) and they are only atypical with respect to the response values.

Consider the vector of the estimated (mean) parameters and the vector of the fitted values (\(\widehat{\boldsymbol{Y}}\)) and compared these quantities in the model without outlying observations and in a model with some outliers.

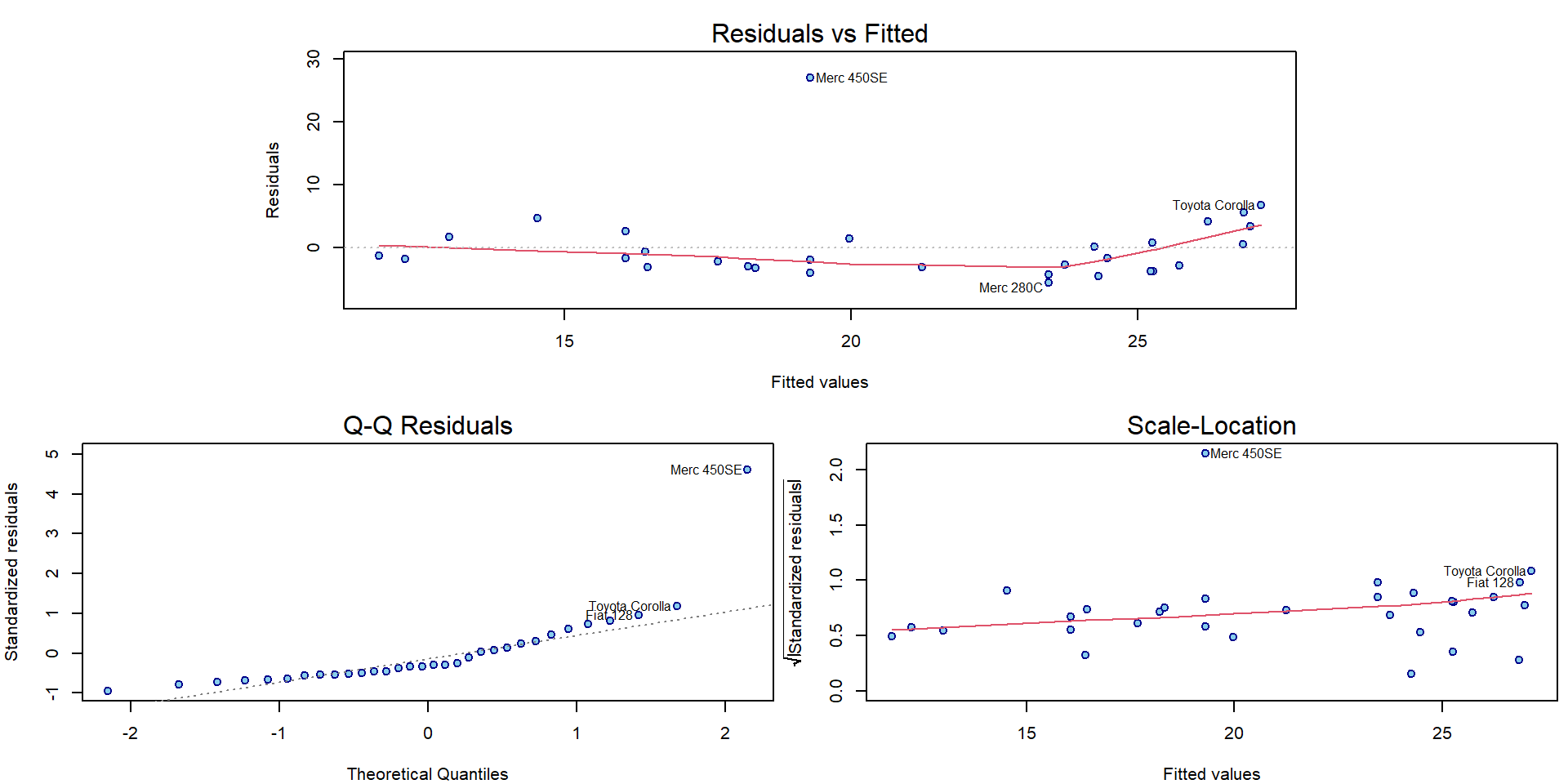

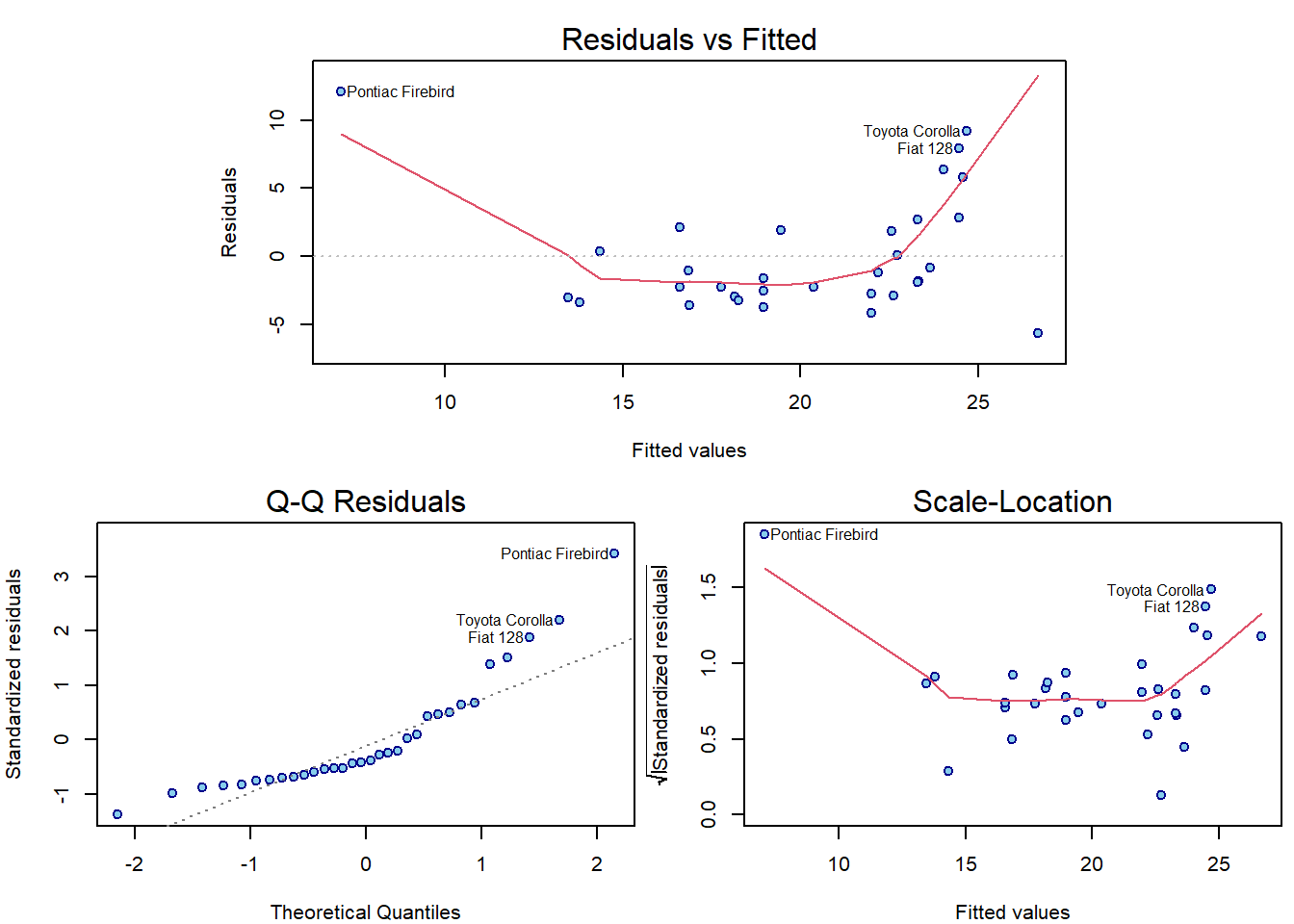

Compare also the graphical diagnostic plots for both models with outlying observations:

par(mar = c(4,4,2.5,0.5))

plotLM(m2)

par(mar = c(4,4,2.5,0.5))

plotLM(m3)

Outlier detection

Consider the outlier model for \(t\)-th observation: \[ \mathtt{m[t]}: \quad \mathbf{Y} | \mathbb{X} \sim \mathsf{N}_n \left(\mathbb{X} \boldsymbol{\beta} + \gamma_t e_{t}, \sigma^2\right) \] where \(\gamma_t\) corresponds to the correction for mis-specified expected value for \(t\)-th observation. The test for the null hypothesis \(\gamma_t = 0\) can, thus, be interpreted as a test of outlyingness. Computing the estimates and p-values by fitting each model is expensive:

detect_out <- sapply(1:nrow(mtcars_out2), function(t){

e_t <- rep(0, nrow(mtcars_out2))

e_t[t] <- 1

m_t <- lm(mpg ~ disp + e_t, data = mtcars_out2)

sm_t <- summary(m_t)

c(gamma = coef(m_t)[3], pval = sm_t$coefficients[3,4])

})

detect_out <- as.matrix(t(detect_out))

rownames(detect_out) <- rownames(mtcars_out2)

detect_out## gamma.e_t pval

## Mazda RX4 -3.63032 6.562e-01

## Mazda RX4 Wag -3.63032 6.562e-01

## Datsun 710 -3.76319 6.482e-01

## Hornet 4 Drive 0.41741 9.591e-01

## Hornet Sportabout 1.42269 8.635e-01

## Valiant -4.20051 6.044e-01

## Duster 360 -3.28998 6.906e-01

## Merc 240D -0.57799 9.437e-01

## Merc 230 -2.48066 7.620e-01

## Merc 280 -5.21529 5.213e-01

## Merc 280C -6.67304 4.108e-01

## Merc 450SE 26.99503 1.940e-04

## Merc 450SL 27.92817 9.792e-05

## Merc 450SLC -5.35392 5.093e-01

## Cadillac Fleetwood -3.53428 6.839e-01

## Lincoln Continental -3.98224 6.440e-01

## Chrysler Imperial 0.19517 9.818e-01

## Fiat 128 5.46854 5.103e-01

## Honda Civic 3.18599 7.023e-01

## Toyota Corolla 6.84209 4.103e-01

## Toyota Corona -4.66210 5.703e-01

## Dodge Challenger -3.53101 6.660e-01

## AMC Javelin -4.34651 5.940e-01

## Camaro Z28 -4.71529 5.667e-01

## Pontiac Firebird 3.57671 6.694e-01

## Fiat X1-9 -0.06203 9.941e-01

## Porsche 914-2 0.11717 9.886e-01

## Lotus Europa 3.88661 6.387e-01

## Ford Pantera L -2.01575 8.070e-01

## Ferrari Dino -5.57187 4.946e-01

## Maserati Bora -4.66233 5.671e-01

## Volvo 142E -4.73213 5.644e-01From lecture we know that the estimates can be obtained as \(\widehat{\gamma}_t = U_t / m_{tt}\):

deleted_residual <- residuals(m3)/(1-hatvalues(m3))

all.equal(detect_out[,1], deleted_residual)## [1] TRUEwhich is often called a deleted residual. Then, an estimate for the variance is \[ \widehat{\mathsf{var}} \left[\widehat{\gamma}_t | \mathbb{X}\right] = \widehat{\frac{\sigma^2}{m_{tt}}} = \frac{1}{m_{tt}} \mathsf{MS}_{e,t}^{out} = \frac{1}{m_{tt}} \mathsf{MS}_{e} \dfrac{n-r-(U_t^{std})^2}{n-r-1} \] which is used to obtain p-values:

vars <- 1/(1-hatvalues(m3)) * sum(residuals(m3)^2)/m3$df.residual * (m3$df.residual-stdres(m3)^2)/(m3$df.residual - 1)

tstats <- deleted_residual / sqrt(vars)

pvals <- 2 * pt(abs(tstats), df=m3$df.residual-1, lower.tail = FALSE)

all.equal(detect_out[,2], pvals)## [1] TRUEHowever, we are facing multiple testing problem, hence, p-values should be adjusted by e.g. Bonferroni correction (multiply p-value by number of tests, cut if exceeds 1):

(pval_bonf <- pmin(1, pvals * length(pvals)))## [1] 1.000000 1.000000 1.000000 1.000000 1.000000 1.000000 1.000000 1.000000 1.000000 1.000000 1.000000

## [12] 0.006208 0.003134 1.000000 1.000000 1.000000 1.000000 1.000000 1.000000 1.000000 1.000000 1.000000

## [23] 1.000000 1.000000 1.000000 1.000000 1.000000 1.000000 1.000000 1.000000 1.000000 1.000000NEVER exclude observations in an automatic manner! It depends on the underlying model, which may not be suitable. Exclude observations only under reasonable justification:

- Is it data error?

- Is the assumed model correct?

- Can we explain the unusual observation?

- Are we interested in capturing this anomally?

2. Leverage points

Unlike the outlying observations, the leverage points are atypical with respect to the domain of the covariate - the \(x\) axis. Similarly as before, we will introduce some leverage points in the dataset and we will investigate the effect on the final model.

mtcars_lev1 <- mtcars

i3 <- 1 # try also 20

mtcars_lev1$disp[i3] <- 0

summary(m4 <- lm(mpg ~ disp , data = mtcars_lev1))##

## Call:

## lm(formula = mpg ~ disp, data = mtcars_lev1)

##

## Residuals:

## Min 1Q Median 3Q Max

## -7.614 -2.135 -0.872 2.079 7.971

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 28.61379 1.27520 22.44 < 2e-16 ***

## disp -0.03776 0.00492 -7.68 1.4e-08 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 3.56 on 30 degrees of freedom

## Multiple R-squared: 0.663, Adjusted R-squared: 0.652

## F-statistic: 59 on 1 and 30 DF, p-value: 1.44e-08Visually:

par(mfrow = c(1,1), mar = c(4,4,0.5,0.5))

plot(mpg ~ disp, data = mtcars_lev1,

xlab = expression(Displacement~"["~inch^3~"]"), ylab = "Miles per Gallon",

pch = 22, bg = "lightblue", col = "navyblue")

points(mtcars$mpg[i3] ~ mtcars$disp[i3], pch = 22, bg = "lightblue", col="navyblue", cex = 2)

arrows(x0 = mtcars$disp[i3], x1 = mtcars_lev1$disp[i3], y0 = mtcars$mpg[i3])

points(mtcars_lev1$mpg[i3] ~ mtcars_lev1$disp[i3], pch = 22, bg = "red", cex = 2)

abline(m1, col = "blue", lwd = 2)

abline(m4, col = "red", lwd = 2)

And, again, the second leverage point can make the whole situation even worse:

mtcars_lev2 <- mtcars_lev1

i4 <- 25 # try also 15

mtcars_lev2$disp[i4] <- mtcars_lev2$disp[i4] + 300

summary(m5 <- lm(mpg ~ disp , data = mtcars_lev2))##

## Call:

## lm(formula = mpg ~ disp, data = mtcars_lev2)

##

## Residuals:

## Min 1Q Median 3Q Max

## -5.68 -2.93 -1.73 1.99 12.13

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 26.67608 1.42922 18.66 < 2e-16 ***

## disp -0.02801 0.00513 -5.46 6.3e-06 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 4.34 on 30 degrees of freedom

## Multiple R-squared: 0.498, Adjusted R-squared: 0.482

## F-statistic: 29.8 on 1 and 30 DF, p-value: 6.34e-06is <- c(i3, i4)

par(mfrow = c(1,1), mar = c(4,4,0.5,0.5))

plot(mpg ~ disp, data = mtcars_lev2,

xlab = expression(Displacement~"["~inch^3~"]"), ylab = "Miles per Gallon",

pch = 22, bg = "lightblue", col = "navyblue")

points(mtcars$mpg[is] ~ mtcars$disp[is], pch = 22, bg = "lightblue", col="navyblue", cex = 2)

arrows(x0 = mtcars$disp[is], x1 = mtcars_lev2$disp[is], y0 = mtcars$mpg[is])

points(mtcars_lev2$mpg[is] ~ mtcars_lev2$disp[is], pch = 22, bg = "red", cex = 2)

abline(m1, col = "blue", lwd = 2)

abline(m4, col = "red", lwd = 2)

abline(m5, col = "red4", lwd = 2)

Note, that both leverage points are tied with response values close

to the mean. They are only atypical with respect to the displacement

value. However, according to the model, these response values are

atypical for such extreme disp values and, hence, could be

considered outliers as well.

par(mar = c(4,4,2.5,0.5))

plotLM(m5)

Individual work

- Compare the estimated parameters for all three models and investigate the effect of the introduced leverage points.

- Note, that the leverage points do not have that much effect (compared to the outlying observations) if they are supposed to be only atypical with respect to the covariate domain (and not with respect to the response).

Leverage point detection

The diagonal element \(h_{tt} = \mathbf{x}_t^\top \left(\mathbb{X}^\top\mathbb{X}\right)^{-1} \mathbf{x}_t\) of hat matrix \(\mathbb{H} = \mathbb{X} \left(\mathbb{X}^\top\mathbb{X}\right)^{-1} \mathbb{X}^\top\) is called t-th leverage. In model with intercept the model can be rewritten with centered regressors, which yields \[ h_{tt} = \frac{1}{n} + (x_{t1} - \overline{x}_{1}, \ldots, x_{tp} - \overline{x}_{p}) \; \mathbb{Q} \; (x_{t1} - \overline{x}_{1}, \ldots, x_{tp} - \overline{x}_{p})^\top \] where \(\mathbb{Q} = \left(\widetilde{\mathbb{X}}^\top \widetilde{\mathbb{X}}\right)^{-1}\) and \(\widetilde{\mathbb{X}} = \left(\mathbf{X}_1-\overline{x}_1 \;|\; \cdots \;|\; \mathbf{X}_p-\overline{x}_p \right)\). We immediately see that \(h_{tt}\) expresses how distant the \(t\)-th observation is from the centers \(\overline{x}_{j}\). Moreover, it is obvious that the response values do not play any role here.

Remember that \[ \mathsf{var} \left[U_i | \mathbb{X}\right] = \mathsf{var} \left[Y_i - \widehat{Y}_i | \mathbb{X}\right] = \sigma^2 m_{ii} = \sigma^2 ( 1 - h_{ii}) \] so the residuals have lower variance with higher leverages. Thus, fitted values of high leverage observations are forced to be closer to the observed response values than those of low leverage observations.

cbind(1:nrow(mtcars), hatvalues(m1), hatvalues(m4), hatvalues(m5))## [,1] [,2] [,3] [,4]

## Mazda RX4 1 0.04175 0.12855 0.10851

## Mazda RX4 Wag 2 0.04175 0.03950 0.03913

## Datsun 710 3 0.06288 0.05772 0.05383

## Hornet 4 Drive 4 0.03281 0.03324 0.03198

## Hornet Sportabout 5 0.06635 0.06568 0.05306

## Valiant 6 0.03132 0.03125 0.03139

## Duster 360 7 0.06635 0.06568 0.05306

## Merc 240D 8 0.04608 0.04318 0.04217

## Merc 230 9 0.04823 0.04502 0.04368

## Merc 280 10 0.03962 0.03770 0.03762

## Merc 280C 11 0.03962 0.03770 0.03762

## Merc 450SE 12 0.03552 0.03604 0.03357

## Merc 450SL 13 0.03552 0.03604 0.03357

## Merc 450SLC 14 0.03552 0.03604 0.03357

## Cadillac Fleetwood 15 0.15350 0.14708 0.10970

## Lincoln Continental 16 0.14165 0.13607 0.10196

## Chrysler Imperial 17 0.12323 0.11894 0.08994

## Fiat 128 18 0.07978 0.07253 0.06544

## Honda Civic 19 0.08172 0.07423 0.06677

## Toyota Corolla 20 0.08476 0.07691 0.06885

## Toyota Corona 21 0.05695 0.05256 0.04974

## Dodge Challenger 22 0.04725 0.04751 0.04086

## AMC Javelin 23 0.04253 0.04295 0.03789

## Camaro Z28 24 0.06113 0.06075 0.04971

## Pontiac Firebird 25 0.09143 0.08926 0.33338

## Fiat X1-9 26 0.07959 0.07236 0.06531

## Porsche 914-2 27 0.05686 0.05248 0.04967

## Lotus Europa 28 0.06988 0.06384 0.05865

## Ford Pantera L 29 0.06163 0.06122 0.05003

## Ferrari Dino 30 0.04668 0.04369 0.04260

## Maserati Bora 31 0.04162 0.04207 0.03732

## Volvo 142E 32 0.05653 0.05219 0.04945A rule of thumb for leverage detection:

which(hatvalues(m5) > 3 * m5$rank / nrow(mtcars_lev2))## Pontiac Firebird

## 25Individual work

- Consider a situation, where both types of atypical observations are introduced in the model - the leverage points and, also, the outlying observations.

- How much bad can the model become with bad leverage points and bad outlying observations?

3. Cook’s distance and other influence measures

In general, ?influence.measures provides many other

measures on finding influential observations.

infm <- influence.measures(m5)

head(infm$infmat)## dfb.1_ dfb.disp dffit cov.r cook.d hat

## Mazda RX4 -0.49126 0.41453 -0.49126 1.052 0.116840 0.10851

## Mazda RX4 Wag -0.04788 0.02504 -0.05579 1.108 0.001606 0.03913

## Datsun 710 -0.04520 0.03064 -0.04731 1.128 0.001156 0.05383

## Hornet 4 Drive 0.03301 0.01241 0.08196 1.090 0.003451 0.03198

## Hornet Sportabout -0.01507 0.07481 0.11669 1.111 0.006985 0.05306

## Valiant -0.05608 0.00638 -0.09469 1.084 0.004594 0.03139apply(infm$is.inf, 2, which)## dfb.1_ dfb.disp dffit cov.r cook.d hat

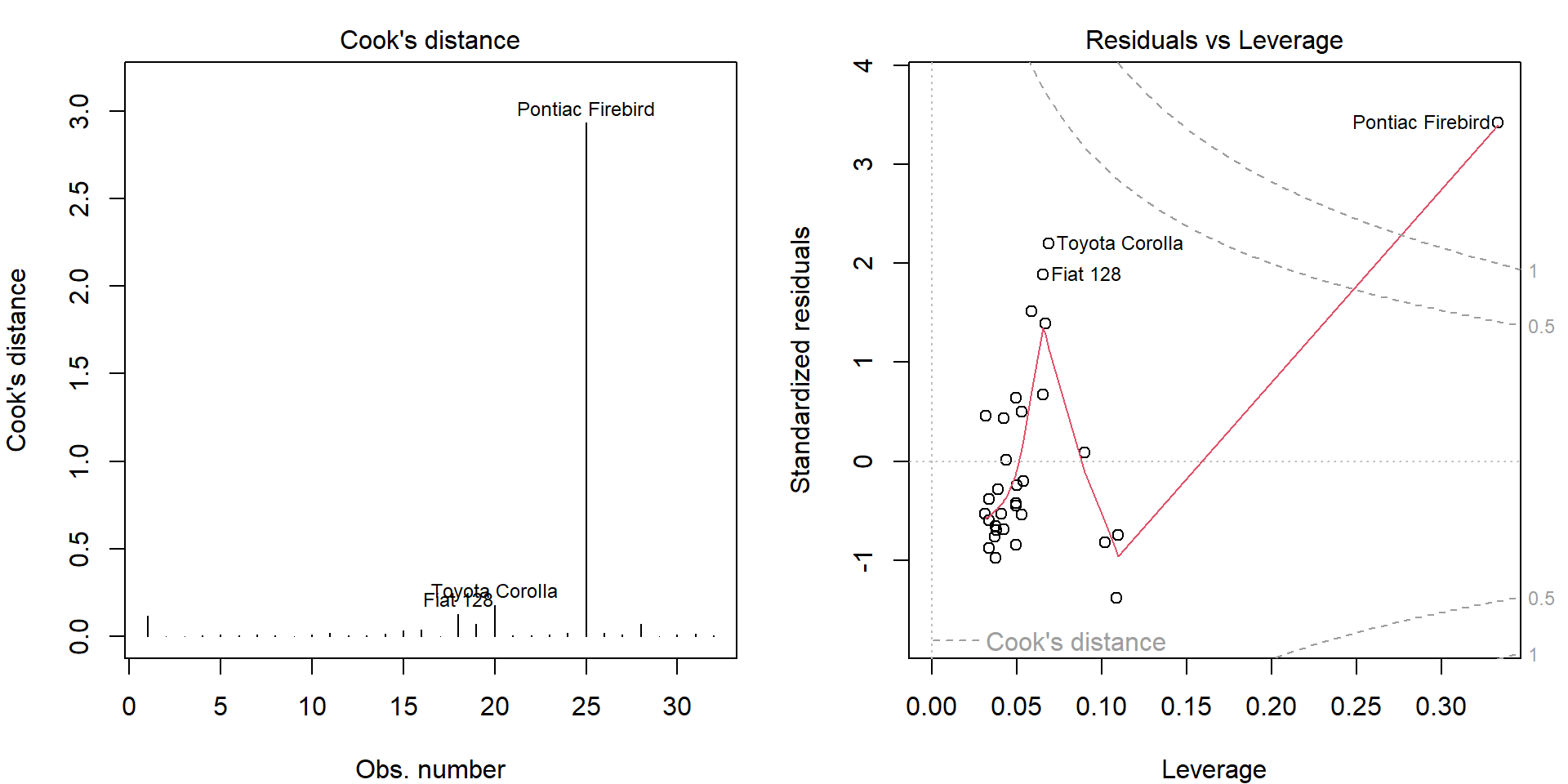

## 25 25 25 25 25 25We will only show enough to understand the two default plots for linear models:

par(mfrow = c(1,2), mar = c(4,4,2,1.5))

plot(m5, which = 4:5)

- Standardized residuals: \(U_i^{std} = U_i / \sqrt{\mathsf{MS}_e m_{ii}}\) have zero mean and unit variance.

- Leverages: \(h_{tt}\) measure the irregularity in regression space.

- Cook’s distance: \(D_t = \frac{1}{r \mathsf{MS}_e} \|\widehat{\mathbf{Y}} - \widehat{\mathbf{Y}}_{(-t\bullet)}\|^2 = \frac{1}{r} \frac{h_{tt}}{m_{tt}} \left(U_t^{std}\right)^2\) measures the difference in fitted values estimated by excluding \(t\)-th observation (up to the scale).

Notice that the top 3 observations in Cook’s distance are the

labelled observations in plot.lm() plots.

Given a distance \(d \in \mathbb{R}\) we can express standardized residuals as two functions of \(d\) and leverage \(h\): \(u_{1,2}(h,d) = \pm\sqrt{rd\frac{1-h}{h}}\), which are the dashed grey lines on the right hand side.

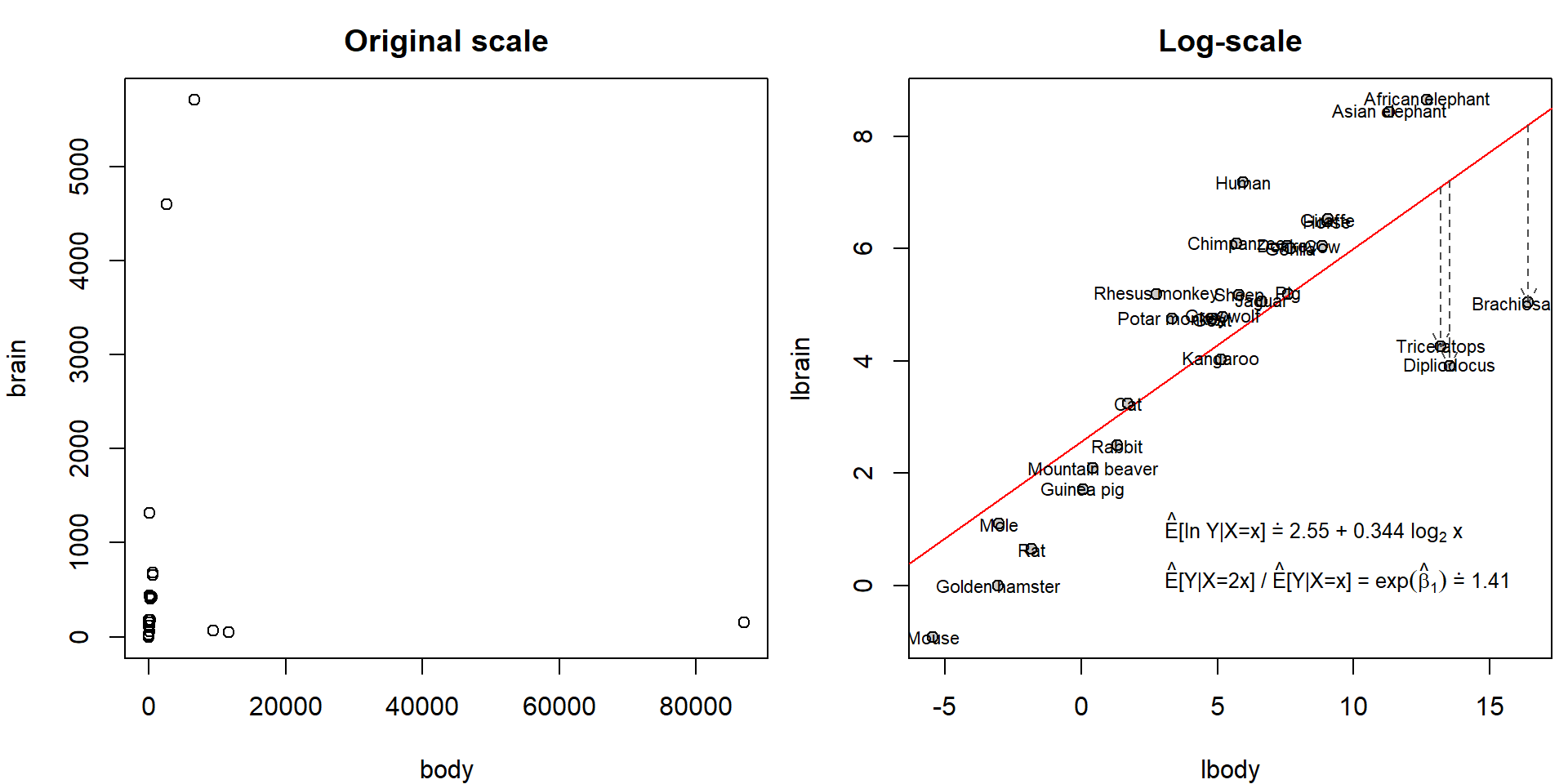

Real data example - Animals

We will explore data on animals containing information about the body weight (in kg) and brain weight (in g). See how widely spread the values are:

data(Animals, package = "MASS")

head(Animals)## body brain

## Mountain beaver 1.35 8.1

## Cow 465.00 423.0

## Grey wolf 36.33 119.5

## Goat 27.66 115.0

## Guinea pig 1.04 5.5

## Dipliodocus 11700.00 50.0summary(Animals)## body brain

## Min. : 0.02 Min. : 0.4

## 1st Qu.: 3.10 1st Qu.: 22.2

## Median : 53.83 Median : 137.0

## Mean : 4278.44 Mean : 574.5

## 3rd Qu.: 479.00 3rd Qu.: 420.0

## Max. :87000.00 Max. :5712.0Hence, original scale is not suitable for modelling purposes. However, on log-scale we observe nice linear trend:

Animals <- transform(Animals, lbody = log2(body), lbrain = log(brain))

par(mfrow = c(1,2), mar = c(4,4,2.5,0.5))

plot(brain ~ body, Animals, main = "Original scale")

plot(lbrain ~ lbody, Animals, main = "Log-scale", bg = "grey80", pch = 21)

abline(mall <- lm(lbrain ~ lbody, data = Animals), col = "red")

dinosaurs <- c("Brachiosaurus", "Dipliodocus", "Triceratops")

arrows(x0 = Animals[dinosaurs, "lbody"], y0 = fitted(mall)[dinosaurs], y1 = Animals[dinosaurs, "lbrain"],

col = "grey30", lty = 2, length = 0.1)

text(lbrain ~ lbody, data = Animals, labels = rownames(Animals), cex = 0.7)

text(2.5, 1, pos = 4, cex = 0.8,

labels = eval(substitute(expression(paste(hat(E), "[ln Y|X=x]", dot(" = "),

be0, " + ", be1, " ", log[2], " x", sep = "")),

list(be0 = format(coef(mall)[1], digits = 3),

be1 = format(coef(mall)[2], digits = 3)))))

text(2.5, 0.1, pos = 4, cex = 0.8,

labels = eval(substitute(expression(paste(hat(E), "[Y|X=2x] / ", hat(E), "[Y|X=x] = ",

exp(hat(beta)[1]), dot(" = "), eb1 , sep = "")),

list(eb1 = format(exp(coef(mall)[2]), digits = 3))))) However, it seems that the fitted line is heavily influenced by three

observations: Brachiosaurus, Dipliodocus and Triceratops. Unlike other

observations, these three “animals” are extinct for millions of years.

These exemplars didn’t have the same amount of time to evolve their

brain wrt body. They also didn’t stand the test of time and natural

selection.

However, it seems that the fitted line is heavily influenced by three

observations: Brachiosaurus, Dipliodocus and Triceratops. Unlike other

observations, these three “animals” are extinct for millions of years.

These exemplars didn’t have the same amount of time to evolve their

brain wrt body. They also didn’t stand the test of time and natural

selection.

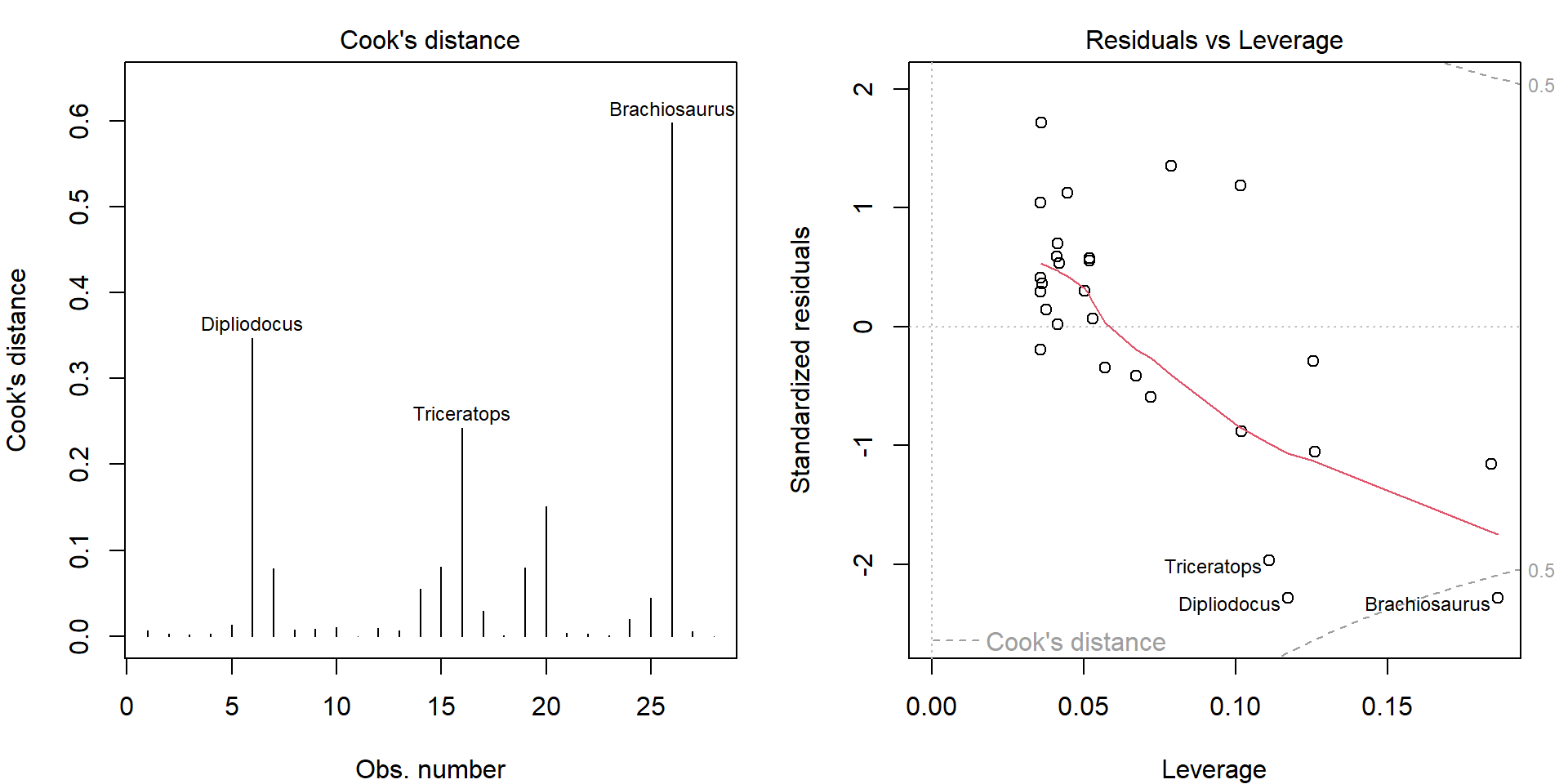

Before we decide to exclude them, let’s explore the corresponding influential measures:

par(mfrow = c(1,2), mar = c(4,4,2,1.5))

plot(mall, which = 4:5)

Clearly, these three observations dominate in Cook’s distance. Top 6 observations in terms of deleted residuals:

deleted_residuals <- residuals(mall)/(1-hatvalues(mall))

head(sort(abs(deleted_residuals), decreasing = TRUE))## Brachiosaurus Dipliodocus Triceratops Human Asian elephant Mouse

## 3.878 3.726 3.199 2.680 2.160 1.961Top 6 observations in terms of hat values:

head(sort(hatvalues(mall), decreasing = TRUE))## Brachiosaurus Mouse Golden hamster Mole Dipliodocus Triceratops

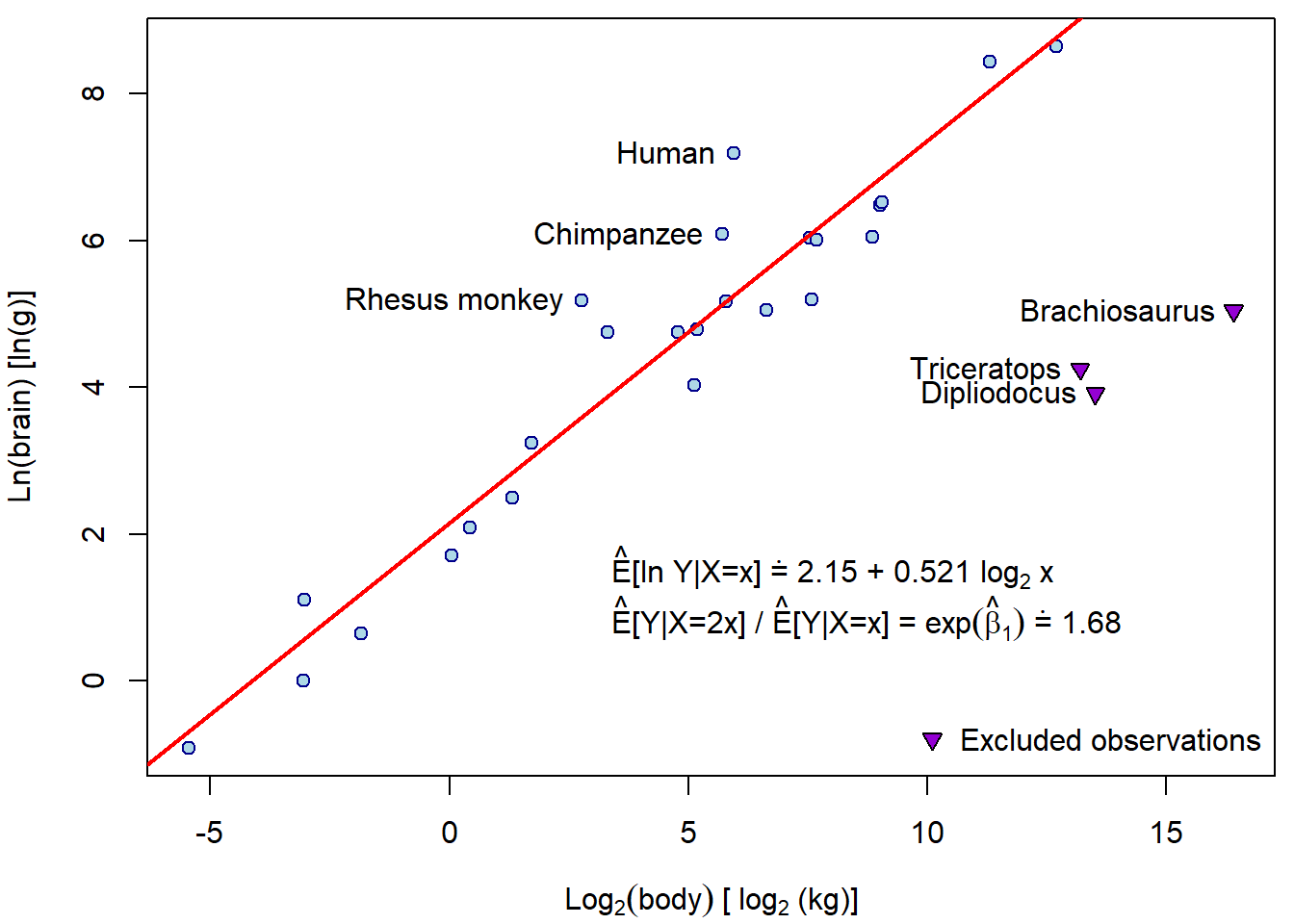

## 0.1863 0.1840 0.1261 0.1256 0.1173 0.1110Let’s compare the difference in the estimated line after exclusion of dinosaurs:

exclude <- c("Brachiosaurus", "Dipliodocus", "Triceratops")

keep <- setdiff(rownames(Animals), exclude)

subAnimals <- Animals[keep,]

msub <- lm(lbrain ~ lbody, data = subAnimals)

par(mfrow = c(1,1), mar = c(4,4,0.5,0.5))

plot(lbrain ~ lbody, data = subAnimals, pch = 21, col = "darkblue", bg = "lightblue",

xlim = range(Animals$lbody),

xlab = expression(Log[2](body)~"["~log[2]~"(kg)]"), ylab = "Ln(brain) [ln(g)]")

abline(msub, col = "red", lwd = 2)

text(3, 1.5, pos = 4,

labels = eval(substitute(expression(paste(hat(E), "[ln Y|X=x]", dot(" = "),

be0, " + ", be1, " ", log[2], " x", sep = "")),

list(be0 = format(coef(msub)[1], digits = 3),

be1 = format(coef(msub)[2], digits = 3)))))

text(3, 0.8, pos = 4,

labels = eval(substitute(expression(paste(hat(E), "[Y|X=2x] / ", hat(E), "[Y|X=x] = ",

exp(hat(beta)[1]), dot(" = "), eb1 , sep = "")),

list(eb1 = format(exp(coef(msub)[2]), digits = 3)))))

points(lbrain ~ lbody, data = Animals[exclude,], pch = 25, col = "black", bg = "darkviolet")

text(lbrain ~ lbody, data = Animals[exclude,], labels = exclude, pos = 2)

top3res <- order(abs(residuals(msub)), decreasing = TRUE)[1:3]

text(lbrain ~ lbody, data = subAnimals[top3res,], labels = rownames(subAnimals)[top3res], pos = 2)

legend("bottomright", legend = "Excluded observations", bty = "n",

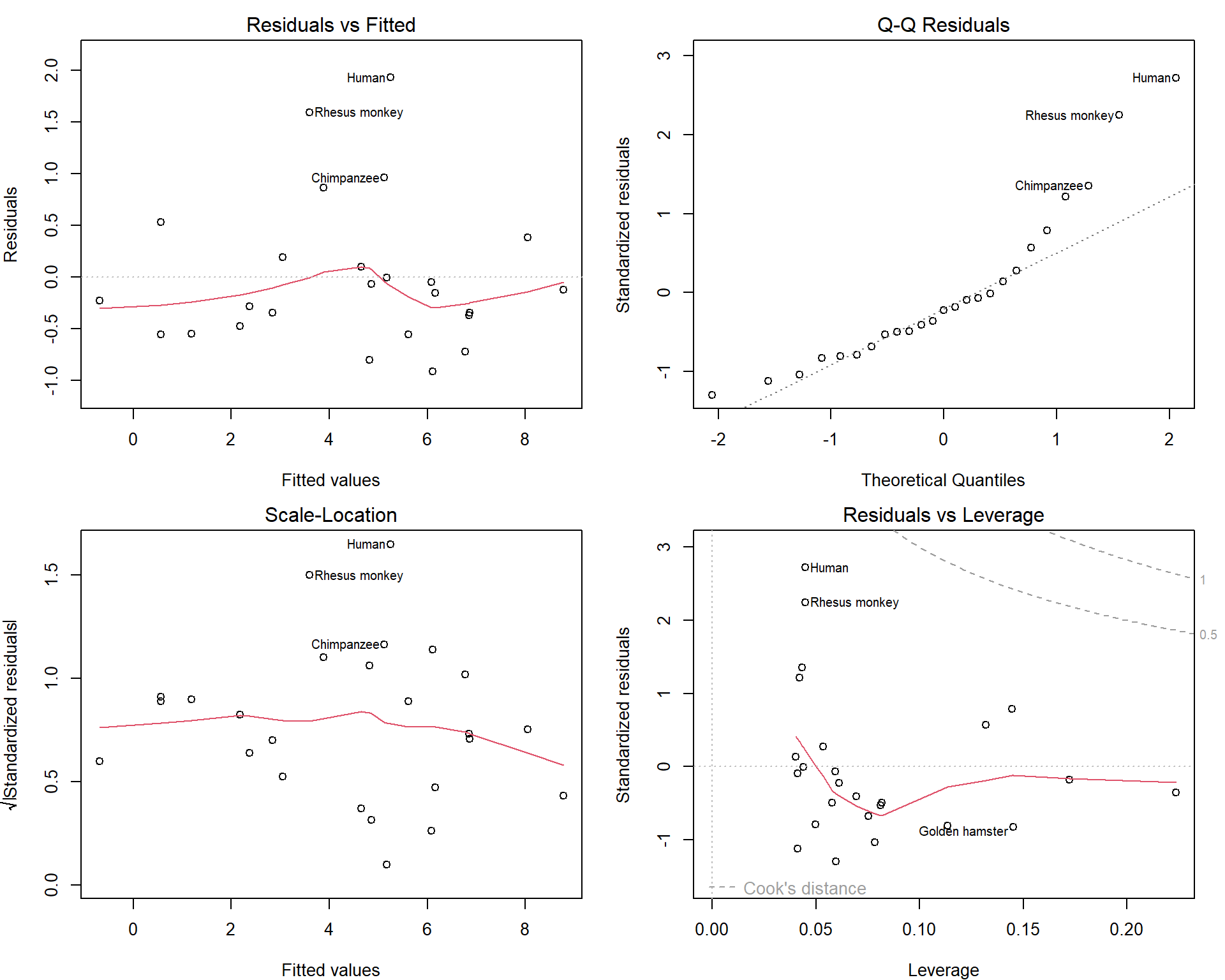

pch = 25, pt.bg = "darkviolet") How do the diagnostic plots look like now?

How do the diagnostic plots look like now?

par(mfrow = c(2,2), mar = c(4,4,2,1.5))

plot(msub, which = c(1:3,5))

Maybe we should consider excluding Human as well… But this depends on the purpose of our model.